As the volume of AI-generated content rapidly grows, a new breed of technology has come onto the scene: AI-detection tools, designed to spot machine-generated text. But as schools, publishers, and businesses invest in these tools, a troubling question lingers: how reliable are they? As it turns out, the very premise of using artificial intelligence to detect other AI might be flawed from the start.

Over the past year, AI-detection websites like GPTZero, Turnitin’s new AI checker, and Originality.AI have promised to weed out computer-generated writing. However, mounting evidence suggests that these tools are often inaccurate, flagging human writing as AI-generated and letting machine-written text pass as human, which has sparked frustration and concern among students, educators, and professionals.

The surge in AI tools like OpenAI’s ChatGPT has given rise to more machine-written content across industries. From students crafting essays to content marketers generating articles, AI is now a part of everyday work. AI-detection websites aim to identify these generated texts by recognizing patterns, tone, and word usage that hint at AI writing. In theory, it sounds straightforward. But in reality, the process is much more complicated, often with disappointing results.

“AI has become so advanced that it’s extremely difficult for other AI to spot a difference between AI-generated text and human text,” explains Dr. Laura Chen, an AI researcher at Stanford University. “The result is that you get a lot of false positives and negatives: AI that incorrectly labels human work as AI-written and vice versa. It’s like a dog chasing its own tail.”

Indeed, recent studies have shown that many of these detection tools are far from foolproof. A University of Michigan study, for example, found that multiple AI-detection programs misidentified human-written essays as AI-generated nearly a quarter of the time. These errors have led to a range of consequences, from unnecessary academic penalties to confusion among professionals who are left questioning the tools they rely on.

AI detectors typically function by examining a text’s structure, grammar, and predictability. Machine-written text often follows patterns that, to an AI detector, look suspiciously regular. Certain language models generate text that might appear overly coherent or simplistic. For example, they might string sentences together in a way that’s efficient but lacks human nuance. This predictability is what detection tools are looking for, as well as repetitive phrases or syntactical structures that feel “too organized.”
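The idea of scoring a text by its predictability can be illustrated with a minimal sketch. It is a toy stand-in, not how GPTZero, Turnitin, or Originality.AI actually work: it assumes a tiny bigram model built from a reference text, measures how "surprised" that model is by a new passage (its perplexity), and flags anything below a cutoff. The names `BigramModel` and `looks_machine_written` and the threshold value are hypothetical, chosen only for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class BigramModel:
    """Toy language model: estimates how likely each word is given the previous one."""

    def __init__(self, corpus):
        tokens = tokenize(corpus)
        self.unigrams = Counter(tokens)
        self.bigrams = Counter(zip(tokens, tokens[1:]))
        # One extra slot so that unseen words still get a small probability.
        self.vocab_size = len(self.unigrams) + 1

    def prob(self, prev, word):
        # Add-one (Laplace) smoothing keeps every probability above zero.
        return (self.bigrams[(prev, word)] + 1) / (self.unigrams[prev] + self.vocab_size)

    def perplexity(self, text):
        """Lower perplexity means the text is more predictable to this model."""
        tokens = tokenize(text)
        if len(tokens) < 2:
            raise ValueError("need at least two words to score a text")
        log_sum = sum(math.log(self.prob(p, w)) for p, w in zip(tokens, tokens[1:]))
        return math.exp(-log_sum / (len(tokens) - 1))


def looks_machine_written(text, model, threshold):
    # Assumption: "too predictable" is treated as a sign of machine writing.
    # The threshold is arbitrary, which is one way false positives creep in.
    return model.perplexity(text) < threshold


if __name__ == "__main__":
    model = BigramModel("the cat sat on the mat. the dog sat on the rug.")
    for sample in ("the cat sat on the mat", "rug the on dog mat sat"):
        score = model.perplexity(sample)
        print(f"{sample!r}: perplexity={score:.2f}, "
              f"flagged={looks_machine_written(sample, model, threshold=6.0)}")
```

In this sketch the plainly worded sentence is the one that gets flagged, simply because it is predictable. A person who writes in a clear, regular style would be caught by the same cutoff.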

However, as AI language models become more sophisticated, they’re learning to mimic human “errors,” or the natural inconsistencies that come with human writing. In other words, AI detectors are chasing an incredibly deceptive target, leading to unreliable detection rates. “The AI models we have today are more capable than ever of crafting authentic-sounding content,” says Dr. Chen. “So, asking AI to catch AI-generated content is like moving the goalposts: by the time a detection model adapts, the content generation models have already improved.”

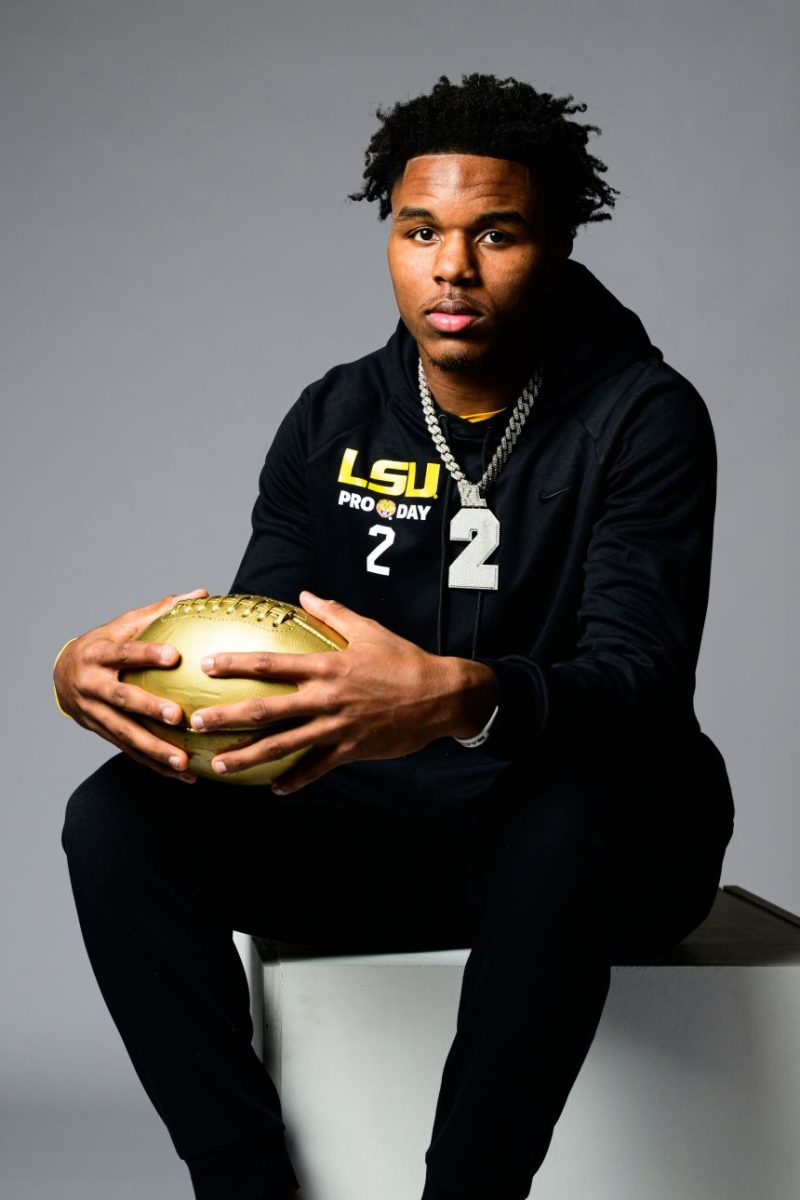

For students and professionals, the inaccuracies of AI detectors are more than a technical issue; they’re a personal and professional one. Students across the country have reported feeling singled out and unfairly accused of using AI for work they wrote themselves. Many describe putting immense effort into an essay, only to have an AI checker return a verdict such as “This contains 90% AI,” forcing them through a humiliating process to prove the work is their own.

Journalists, content writers, and even researchers who publish online are also often required to run their work through these detectors to verify its authenticity. “If we rely solely on AI to make these determinations, we’re in dangerous territory,” says Professor Mark Diaz, an ethics specialist at Columbia University. “There’s a lot of room for error here, and there’s no human review in many cases. These detectors are far from perfect, yet they’re being treated as if they are.”

In response to these concerns, some experts advocate for transparency and restraint when using AI-detection tools, especially in high-stakes scenarios. Instead of relying solely on AI, some suggest that human review should accompany any flagged content. Dr. Chen and other researchers are pushing for a balanced approach, encouraging institutions to use AI detectors as a supplement, not a replacement, for traditional review processes.

Other researchers propose alternative solutions, like digital “watermarks” embedded in AI-generated content. These watermarks would make detection simpler and more accurate, but the technology is still new, and many AI systems lack such features altogether. Until that changes, AI-detection websites may remain stuck in a frustrating cycle of false flags.
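To give a sense of why a watermark could make detection simpler, here is a rough sketch of one published style of scheme, often called a "green list" watermark. It is an assumption that any given AI system would work this way. In the sketch, a secret key and the previous word decide which next words count as "green"; a cooperating generator prefers green words, and a detector simply counts them. The key, the function names, and the toy generator are all hypothetical.

```python
import hashlib
import math

GREEN_FRACTION = 0.5  # assumed share of words marked "green" at each step


def is_green(prev_word, word, key="demo-key"):
    """Use a keyed hash of (previous word, word) to decide if the word is 'green'."""
    digest = hashlib.sha256(f"{key}|{prev_word}|{word}".encode()).digest()
    return digest[0] < 256 * GREEN_FRACTION


def watermark_z_score(words, key="demo-key"):
    """How far the green-word count sits above chance, in standard deviations."""
    pairs = list(zip(words, words[1:]))
    if not pairs:
        raise ValueError("need at least two words to test for a watermark")
    n = len(pairs)
    green = sum(is_green(p, w, key) for p, w in pairs)
    expected = GREEN_FRACTION * n
    std = math.sqrt(n * GREEN_FRACTION * (1 - GREEN_FRACTION))
    return (green - expected) / std


def generate_watermarked(vocab, length, start, key="demo-key"):
    """Toy generator: at each step, pick the first green word it can find."""
    words = [start]
    for i in range(length):
        shift = i % len(vocab)
        candidates = vocab[shift:] + vocab[:shift]  # rotate to vary the output
        chosen = next((w for w in candidates if is_green(words[-1], w, key)),
                      candidates[0])  # fall back if no candidate is green
        words.append(chosen)
    return words


if __name__ == "__main__":
    vocab = ["river", "stone", "light", "garden", "window",
             "paper", "music", "cloud", "street", "bridge"]
    marked = generate_watermarked(vocab, length=50, start="the")
    print("z-score of watermarked text:", round(watermark_z_score(marked), 2))
```

Ordinary text should land near a z-score of zero, because only about half of its words would be green by chance, while text from the cooperating generator scores far higher. The catch is the one noted above: this only works for systems that embed the watermark in the first place.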

So the next time a student or writer is flagged by an AI detector, it may be worth asking whether the tech is as smart as it claims to be. “AI has its place,” says Professor Diaz. “But when it comes to detecting authenticity in writing, it seems we’re asking for more than it can reliably provide. We may need to rethink our approach, and remember that, sometimes, human judgment is still our best tool.”